2019 AWS Machine Learning Research Award

Graph Generative Adversarial Imitation Learning for Choreography Generation from Music


Principal Investigator: Taehee Kim, Ph.D., Youngsan University, Republic of Korea

Abstract

Learning to generate dance motions conditioned on music is regarded as a fascinating AI challenge, since dancing is a highly cognitive human behavior. We propose a machine learning project that generates choreography from music, much as humans do when they learn a new dance. Among the many machine learning approaches one could consider, we propose the Graph Generative Adversarial Imitation Learning (GGAIL) model: a model-free imitation learning model combined with generative adversarial networks (GANs) that uses graph neural networks (GNNs) to imitate complex, high-dimensional dance motions. In this work, the GNN is intended to capture the complex dynamics of choreographic motion both at the level of individual joints and at the level of the agent as a whole. For training data, we extract skeleton data from K-Pop (Korean pop music) dance videos on YouTube using an open-source 3D pose estimation tool. We will collect at least one hundred dance clips with their corresponding music for model training, and we will observe how the GGAIL agent reproduces the given dance-music relationships. We expect the agent to produce lively, sophisticated performances. Our long-term objective is to build a service that suggests artistic choreography for music provided by a user.
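The abstract names two ingredients, graph neural networks over the skeleton and GAIL-style adversarial imitation, without specifying their form. As a rough, framework-free illustration, the sketch below shows one round of graph message passing, where each joint averages its own feature vector with those of the joints it is connected to, and a GAIL-style surrogate reward computed from a discriminator output. The five-joint skeleton in `EDGES`, the mean-aggregation rule, and the reward form -log(1 - D) (reading D as the discriminator's probability that a state-action pair came from the expert dancer) are assumptions for illustration. A real GNN layer would also apply learned weights and a nonlinearity, and the proposal does not specify GGAIL's actual architecture.

```python
import math

# Hypothetical 5-joint skeleton (illustration only):
# 0 = pelvis, 1 = spine, 2 = head, 3 = left hand, 4 = right hand.
EDGES = [(0, 1), (1, 2), (1, 3), (1, 4)]


def neighbours(num_joints, edges):
    """Build an undirected adjacency list, with a self-loop for every joint."""
    adj = {j: [j] for j in range(num_joints)}
    for a, b in edges:
        adj[a].append(b)
        adj[b].append(a)
    return adj


def message_pass(features, adj):
    """One round of mean-aggregation message passing.

    Each joint's new feature vector is the element-wise mean of its own
    feature vector and those of its neighbours (no learned weights here).
    """
    out = []
    for j in range(len(features)):
        group = [features[k] for k in adj[j]]
        dim = len(features[j])
        out.append([sum(f[d] for f in group) / len(group) for d in range(dim)])
    return out


def gail_reward(d_expert_prob, eps=1e-8):
    """GAIL-style surrogate reward -log(1 - D).

    D is assumed to be the discriminator's probability that a (state, action)
    pair came from the expert; the reward grows as D approaches 1.
    `eps` guards against log(0) when D == 1.
    """
    return -math.log(max(1.0 - d_expert_prob, eps))
```

For example, giving joint `j` the feature `[j, 0, 0]` and calling `message_pass` once moves the spine (joint 1) to the mean of all five joints, `[2.0, 0.0, 0.0]`, because it is connected to every other joint. The policy is rewarded more for motions the discriminator mistakes for the expert's.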

Collecting Motion Data

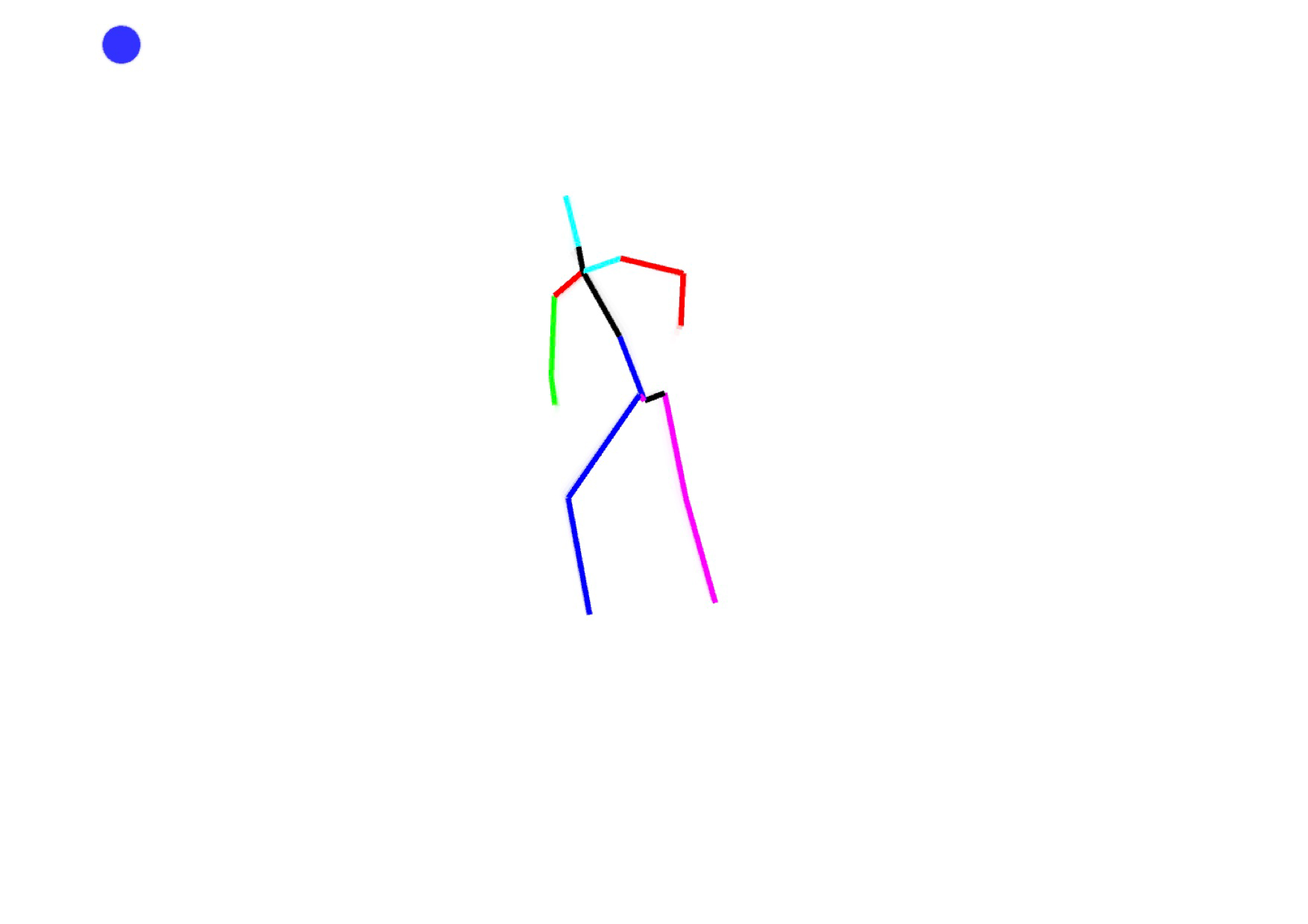

We collect motion data from K-Pop YouTube videos using Lifting from the Deep, an open-source, deep learning-based 3D pose estimation tool.

Extracting Motion Vectors

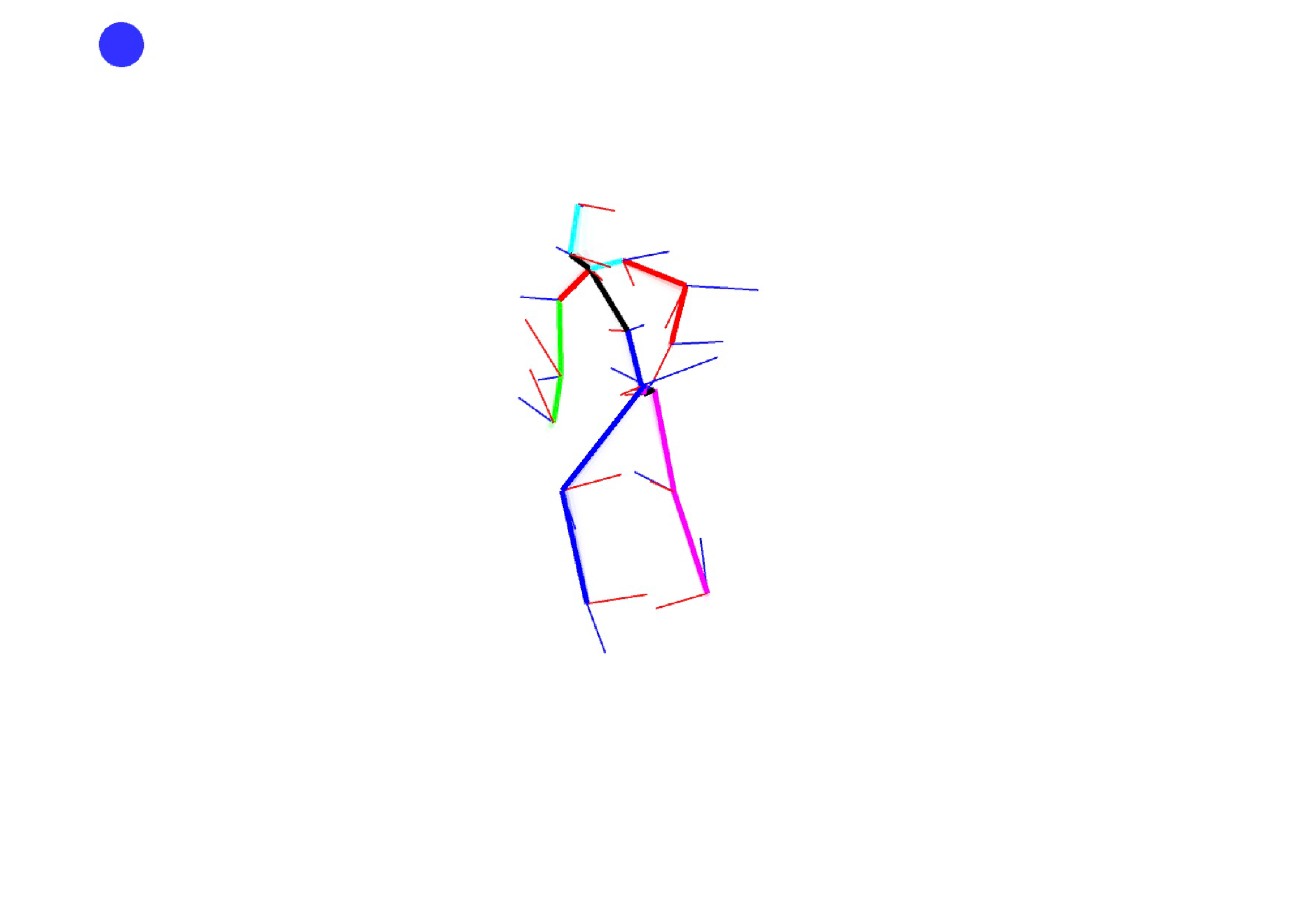

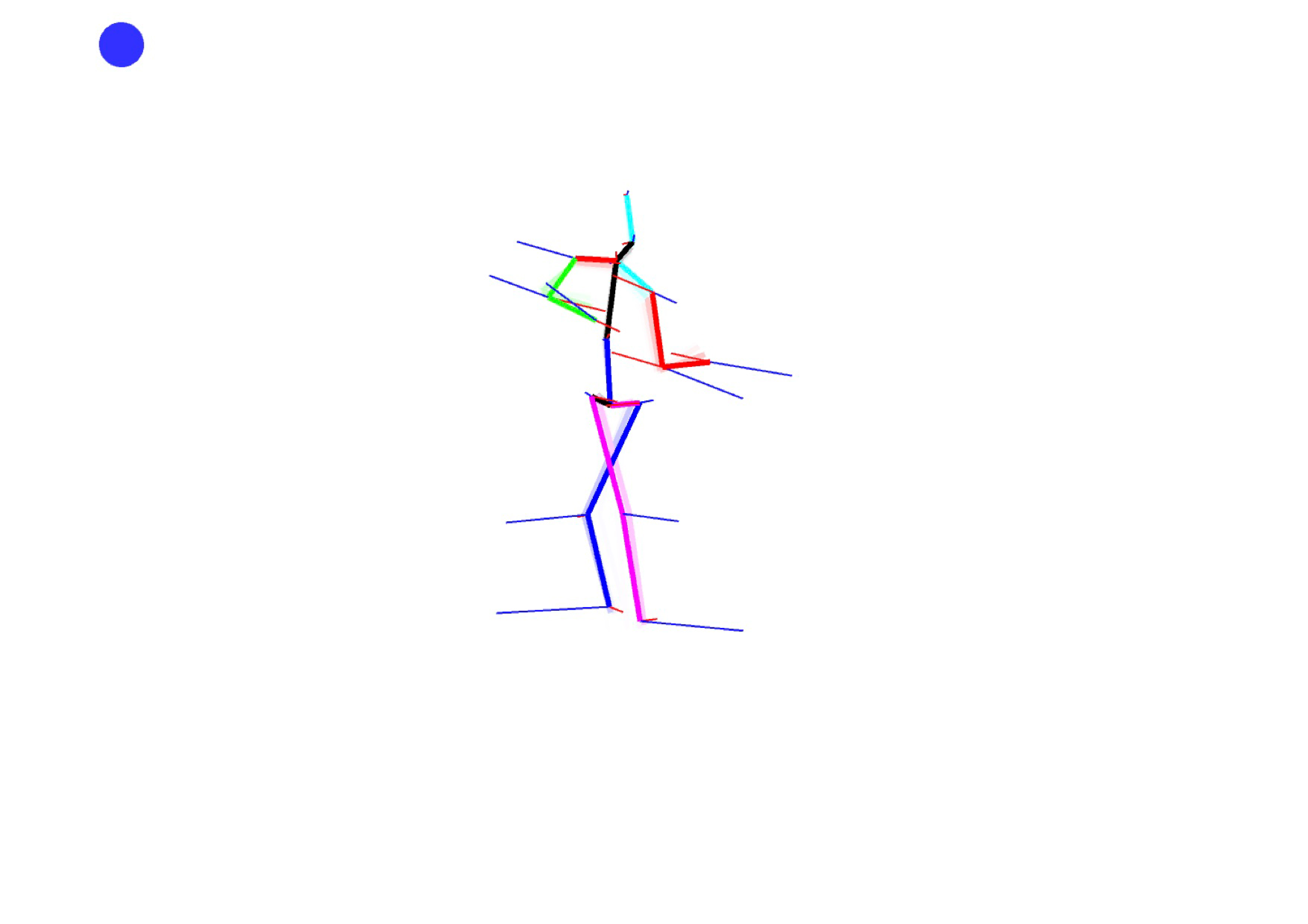

We extract onset events from the music; in the video, each onset is shown as a blinking blue dot in the top-left corner.
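The proposal does not state how onsets are detected. A minimal sketch, assuming a simple energy-based detector (an illustrative stand-in, not necessarily the method used here): compute the energy of overlapping frames, take the half-wave-rectified frame-to-frame energy increase as a novelty curve, and report local maxima that exceed a fraction of the largest novelty value. The `frame_size`, `hop`, and `threshold` defaults are hypothetical; in practice a library routine such as `librosa.onset.onset_detect` would be a more robust choice.

```python
def frame_energies(samples, frame_size, hop):
    """Sum of squared amplitudes for each (overlapping) analysis frame."""
    energies = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        energies.append(sum(x * x for x in frame))
    return energies


def detect_onsets(samples, sample_rate, frame_size=1024, hop=512, threshold=0.1):
    """Return estimated onset times in seconds.

    Novelty is the half-wave-rectified increase in frame energy. A frame is an
    onset if its novelty is a local maximum and is at least `threshold` times
    the largest novelty value in the signal.
    """
    energies = frame_energies(samples, frame_size, hop)
    novelty = [0.0] + [max(0.0, energies[i] - energies[i - 1])
                       for i in range(1, len(energies))]
    peak = max(novelty)
    if peak == 0.0:
        return []  # silent or constant-energy signal: no onsets
    onsets = []
    for i in range(1, len(novelty) - 1):
        if (novelty[i] >= threshold * peak
                and novelty[i] > novelty[i - 1]
                and novelty[i] >= novelty[i + 1]):
            onsets.append(i * hop / sample_rate)  # frame start time
    return onsets
```

Because each onset is reported at the start time of the frame where energy rises most, an estimate can lead the true onset by up to one frame length (1024 samples, or 0.128 s at 8 kHz with these defaults).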

One of the fundamental features of dancing, we suggest, is generating a motion at an onset with respect to the motion leading up to that onset. We therefore want our system to learn the relationship between the joint motions toward an onset and those after it. This is our initial approach to dancing; we will then build more abstract competence on top of it. Accordingly, we extract skeletal motion data from just before and just after every onset, and we call these “motion vectors.” Motion vectors are shown as thin lines on the joints: red lines show the motion vectors toward an onset, and blue lines show those after it.
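A minimal sketch of motion-vector extraction, assuming poses are stored as a list of video frames, each a list of (x, y, z) joint positions, and that an onset time in seconds is aligned to the video by rounding `onset_time * fps`. The fixed `window` (in frames) on either side of the onset is a hypothetical parameter; the text does not say how far before and after an onset the motion is measured.

```python
def onset_to_frame(onset_time, fps):
    """Map an onset time in seconds to the nearest video frame index."""
    return round(onset_time * fps)


def motion_vectors(poses, onset_frame, window):
    """Return (incoming, outgoing) per-joint displacement vectors around an onset.

    poses: list of frames, each a list of (x, y, z) joint positions.
    incoming[j] = poses[t][j] - poses[t - window][j]  (toward the onset, red)
    outgoing[j] = poses[t + window][j] - poses[t][j]  (after the onset, blue)
    """
    t = onset_frame
    if t - window < 0 or t + window >= len(poses):
        raise ValueError("window extends past the clip boundaries")

    def diff(a, b):
        # Component-wise difference of two (x, y, z) positions.
        return tuple(p - q for p, q in zip(a, b))

    incoming = [diff(cur, prev) for cur, prev in zip(poses[t], poses[t - window])]
    outgoing = [diff(nxt, cur) for nxt, cur in zip(poses[t + window], poses[t])]
    return incoming, outgoing
```

For example, at 25 fps an onset at 0.2 s maps to frame 5, and with `window=2` a joint moving one unit along x per frame yields the incoming and outgoing vectors `(2.0, 0.0, 0.0)`.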

The video below is a stick-figure animation of poses extracted from a choreography video here.

As a first step, we are currently implementing dance learning based on the motion-vector method.
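The learning method for this first step is not described here. Purely as a hypothetical baseline, not the authors' method, the relationship between incoming and outgoing motion vectors could be captured by nearest-neighbour lookup: store the (incoming, outgoing) pairs from the training dances and, given a new incoming motion, return the outgoing motion of the closest stored example. The class name and the use of Euclidean distance on flattened vectors are assumptions.

```python
import math


def flatten(vectors):
    """Concatenate per-joint (x, y, z) vectors into one flat feature list."""
    return [c for v in vectors for c in v]


class NearestNeighbourChoreographer:
    """Hypothetical baseline mapping incoming motion vectors to outgoing ones.

    fit() memorises (incoming, outgoing) pairs; predict() returns the outgoing
    vectors of the stored pair whose incoming vectors are closest (Euclidean).
    """

    def __init__(self):
        self.pairs = []

    def fit(self, pairs):
        self.pairs = [(flatten(inc), out) for inc, out in pairs]
        return self

    def predict(self, incoming):
        if not self.pairs:
            raise ValueError("model has not been fitted")
        query = flatten(incoming)
        _, best = min(self.pairs, key=lambda p: math.dist(p[0], query))
        return best
```

Such a lookup can only replay outgoing motions seen during training, which is one reason a generative model such as GGAIL is attractive for producing new choreography.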